FLI September 2022 Newsletter: $3M Impacts of Nuclear War Grants Program!


Welcome to the FLI newsletter! Every month, we bring 24,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

Long-time subscribers might notice that we’ve redesigned our newsletter. Let us know what you think by replying to this email! If your friends or colleagues might benefit from this newsletter, ask them to subscribe here.

Today’s newsletter is a 4-minute read. We cover:

- Our new $3M grants program for research about the impacts of nuclear war

- A panel discussion about general-purpose A.I.

- New research about climate tipping points

- Petrov Day

Announcing the Humanitarian Impacts of Nuclear War Grants!

We’re launching a new grants program for academics and researchers to improve scientific forecasts about the consequences of nuclear war.

This initiative builds on the 2022 Future of Life Award, which we gave to the pioneers who discovered nuclear winter and brought it to the world’s attention. Their efforts ultimately convinced Reagan and Gorbachev to end the Cold War nuclear arms race.

Given today’s geopolitical environment, we urgently need new science and advocacy that can compel policymakers to reduce the risk of nuclear conflict. That’s why we’re providing at least $3 million in grants to researchers at academic and non-profit institutions.

A typical grant will range from $100,000 to $500,000 and will usually support a project for up to three years. Proposed research projects can address issues such as nuclear use scenarios, fuel loads and urban fires, climate modelling, nuclear security doctrines, and more.

Visit our website for additional information about the grant and how to apply. Letters of intent are due on November 15, 2022.

Applications Open for the Vitalik Buterin PhD and Postdoctoral Fellowship

We are accepting applications for the Vitalik Buterin PhD and Postdoctoral Fellowship focused on A.I. Existential Safety.

Current and future PhD students are welcome to apply for the PhD fellowship. PhD fellows will receive funding to cover tuition, fees, and the stipend of their PhD program (up to $40,000), plus $10,000 for research-related expenses. Postdoctoral fellows will receive an $80,000 annual stipend and up to $10,000 for research-related expenses.

Visit our application portal for additional information. The deadline to apply for the PhD fellowship is November 15, 2022. For the postdoctoral fellowship, applications are due by January 2, 2023.

Nominate a Candidate for the Future of Life Award!

The Future of Life Award is a $50,000 prize given to individuals who, without much recognition at the time, helped make today dramatically better than it might otherwise have been. Previous winners include individuals who eradicated smallpox, fixed the ozone layer, banned biological weapons, and prevented nuclear wars.

Follow these instructions to nominate a candidate. If we decide to give the award to your nominee, you will receive a $3,000 prize!

We’re Recruiting!

We’re looking for a Director of U.S. Policy to engage with lawmakers and regulators to future-proof A.I. policy. Applicants should be based in Washington, D.C., Sacramento, or San Francisco. More details here.

Governance and Policy Updates

A.I. Policy:

- The U.K. National Cyber Security Centre published security principles for systems using machine learning.

- The European Commission proposed an A.I. Liability Directive.

Nuclear Security:

- The tenth review conference of the parties to the Treaty on the Non-Proliferation of Nuclear Weapons concluded on August 26 without a consensus.

- Five nations signed and two ratified the Treaty on the Prohibition of Nuclear Weapons, bringing the tally to 91 signatories and 68 state parties.

Biotechnology:

- The World Health Organization published a global guidance framework on how nations should govern dual-use technologies and prevent biological risks.

- The U.S. Government announced new initiatives and investments in biotechnology and biomanufacturing.

Climate Change:

- The U.S. Senate ratified the Kigali Amendment to the Montreal Protocol, agreeing to phase out the use of hydrofluorocarbons.

Updates from FLI

FLI’s Vice-President for Policy and Strategy, Anthony Aguirre, spoke on a panel hosted by the Center for Data Innovation about how the E.U. should regulate general-purpose A.I. (GPAI) systems.

The other panelists were Kai Zenner (Digital Policy Advisor to MEP Axel Voss); Alexandra Belias (DeepMind); Andrea Miotti (Conjecture); Irene Solaiman (Hugging Face); and Hodan Omaar (Center for Data Innovation).

- Additional Context: In May, the French Government proposed that the A.I. Act should regulate GPAI. FLI has advocated for this position since the E.U. released its first draft of the A.I. Act last year. More recently, our E.U. Policy Researcher Risto Uuk co-authored an article arguing that regulating GPAI is essential to future-proofing the A.I. Act.

- Watch the full discussion here.

New Research

Open source A.I.: A new paper by the Brookings Institution warns that the E.U.’s efforts to regulate open source A.I. might stifle innovation.

- Why does this matter? Open source text-to-image models have opened up a can of worms. On the one hand, they accelerate innovation. On the other, they’re often trained on toxic data and used for malicious purposes.

- Counter-point: Researchers worry that developers might attempt to skirt regulation by releasing open source models, and that regulators are being presented with a false binary between innovation and regulation.

Climate tipping points: New research published in Science shows that the Paris Agreement’s target of limiting warming to 1.5°C above pre-industrial levels might not be enough to save us from crossing climate tipping points.

- Why does this matter? Under a business-as-usual scenario, we aren’t even on track to meet the Agreement’s target.

- Dive deeper: In 2019, FLI interviewed Tim Lenton – one of the paper’s co-authors – about climate tipping points. Listen to the conversation on our podcast here.

What We’re Reading

Stockpile stewardship: What does it take to look after the U.S. I.C.B.M. stockpile? A lot of money and decades-old spare parts, according to W.J. Hennigan at Time magazine. Is it worth it? He’s not so sure.

Language models: Yann LeCun, Chief A.I. Scientist at Meta, doesn’t think language models will ever be capable of thinking like us.

Planetary defence: NASA crashed a spacecraft into an asteroid to alter its orbit, a first-of-its-kind planetary defence test.

Open and responsible A.I. licensing: Hugging Face is advocating for licences that are largely open, but embed some use-case restrictions.

Hindsight 20/20

On September 26, 1983, Stanislav Petrov, a lieutenant colonel of the Soviet Air Defense Forces, saved the world from nuclear disaster.

A faulty computer reading suggested that the U.S. had launched five intercontinental ballistic missiles. If Petrov had immediately reported this to his superiors as an attack, nuclear war would almost certainly have followed.

Fortunately, Petrov kept his cool. Acting on an educated hunch, he chose to report that it was a false alarm. He was right: An investigation later revealed that Soviet satellites had picked up sunlight reflecting off clouds.

Petrov was never rewarded for his role in preventing nuclear war. In fact, he was reprimanded. We sought to honour him and his service to humanity with the 2018 Future of Life Award.

What if it wasn’t Petrov on duty that day? We have had several close calls with nuclear weapons before and since. How many more can we survive?

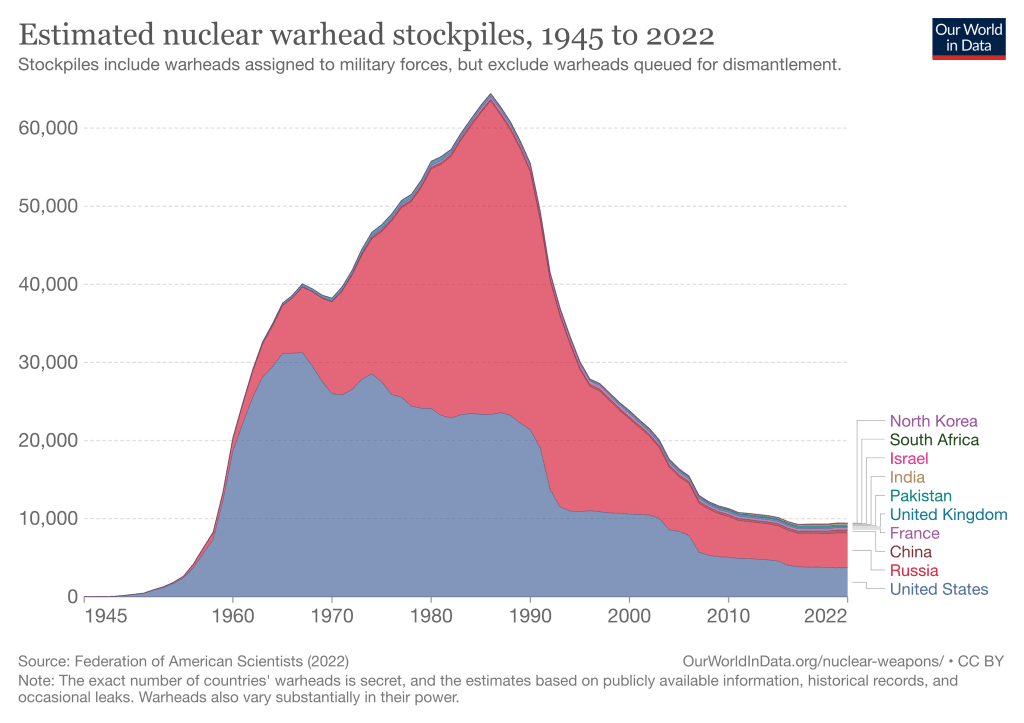

Chart of the Month

September 26 was the International Day for the Total Elimination of Nuclear Weapons. How close are we to that reality? Here’s a handy chart from Our World in Data.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax-deductible in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.