Jaan Tallinn on existential risks

An excellent piece about existential risks by FLI co-founder Jaan Tallinn on Edge.org:

“The reasons why I’m engaged in trying to lower the existential risks has to do with the fact that I’m a convinced consequentialist. We have to take responsibility for modeling the consequences of our actions, and then pick the actions that yield the best outcomes. Moreover, when you start thinking about — in the palette of actions that you have — what are the things that you should pay special attention to, one argument that can be made is that you should pay attention to areas where you expect your marginal impact to be the highest. There are clearly very important issues about inequality in the world, or global warming, but I couldn’t make a significant difference in these areas.”

From the introduction by Max Tegmark:

“Most successful entrepreneurs I know went on to become serial entrepreneurs. In contrast, Jaan chose a different path: he asked himself how he could leverage his success to do as much good as possible in the world, developed a plan, and dedicated his life to it. His ambition makes even the goals of Skype seem modest: reduce existential risk, i.e., the risk that we humans do something as stupid as go extinct due to poor planning.”


Related content

Other posts about AI, FLI projects

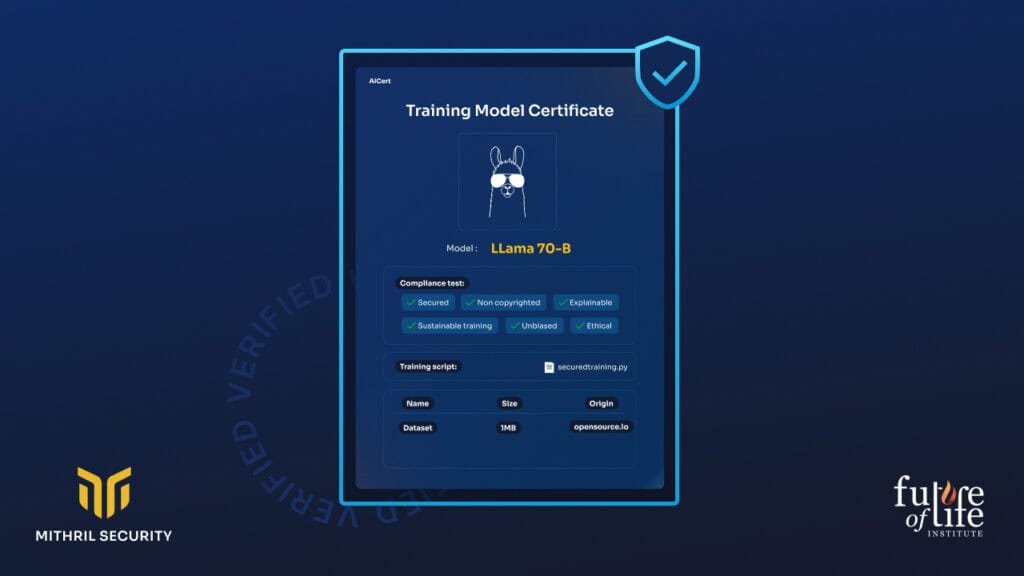

Verifiable Training of AI Models